A Product Development Lifecycle that Works

Sometimes it just happens. You get stuck on a project, you pour your heart into it, and you even take the time to ask the right questions about the business requirements, so that the project is pointed in the right direction and isn't a waste of time.

Then the company shifts direction, and what you’ve been working on suddenly becomes a waste of time, in spite of your best efforts.

One unfortunate consequence of Agile culture is that many people assume that agile means “move first, think later.” As though the fail-fast mentality should permeate every facet of how a tech business operates, people often think that Agile gives you the freedom to start down the path in any direction, trusting that the process of iteration will eventually correct your course. The problem with this line of thinking is that iteration can sometimes fall into long-term divergent behavior.

This concept is borrowed from my experience in biocomplexity. In studying population dynamics, it's critical how and where you start. In other words, given fixed parameters, different initial conditions can mean the difference between a population spiraling out of control and one dying off to extinction.
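To make the point about initial conditions concrete, here is a minimal sketch. It is not the model behind the diagram in this post; it is a toy equation chosen for illustration, dN/dt = r * N * (N / threshold - 1), and the function name, parameter values, and cutoffs are all assumptions. With the parameters held fixed, a population that starts just below the threshold dies off, while one that starts just above it grows without bound.

```python
def simulate(n0, r=1.0, threshold=50.0, dt=0.001, max_steps=100_000,
             floor=1e-3, ceiling=1e6):
    """Euler-integrate the toy model dN/dt = r * N * (N / threshold - 1).

    Returns "extinct" if the population falls to `floor`, "runaway" if it
    reaches `ceiling`, and "undecided" if neither happens in `max_steps`.
    All names and numbers here are illustrative assumptions.
    """
    n = n0
    for _ in range(max_steps):
        # One explicit Euler step of the growth equation.
        n += dt * r * n * (n / threshold - 1.0)
        if n <= floor:
            return "extinct"
        if n >= ceiling:
            return "runaway"
    return "undecided"


# Same parameters, different starting populations.
print(simulate(45.0))
print(simulate(55.0))
```

The only thing that differs between the two runs is the starting value, which is the property the analogy to projects relies on.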

In many ways this paradigm applies to how a project evolves over time. Holding constant how the engineering team operates, how you get a team started can mean the difference between a quickly executed, useful and even reusable feature, and a black hole of iterations that lead nowhere and provide little value, like the spirals in the above Hopf bifurcation diagram.

After experiencing the sting of a dead project a few times, I eventually stumbled upon what was the most elucidative blog post I had seen in years:

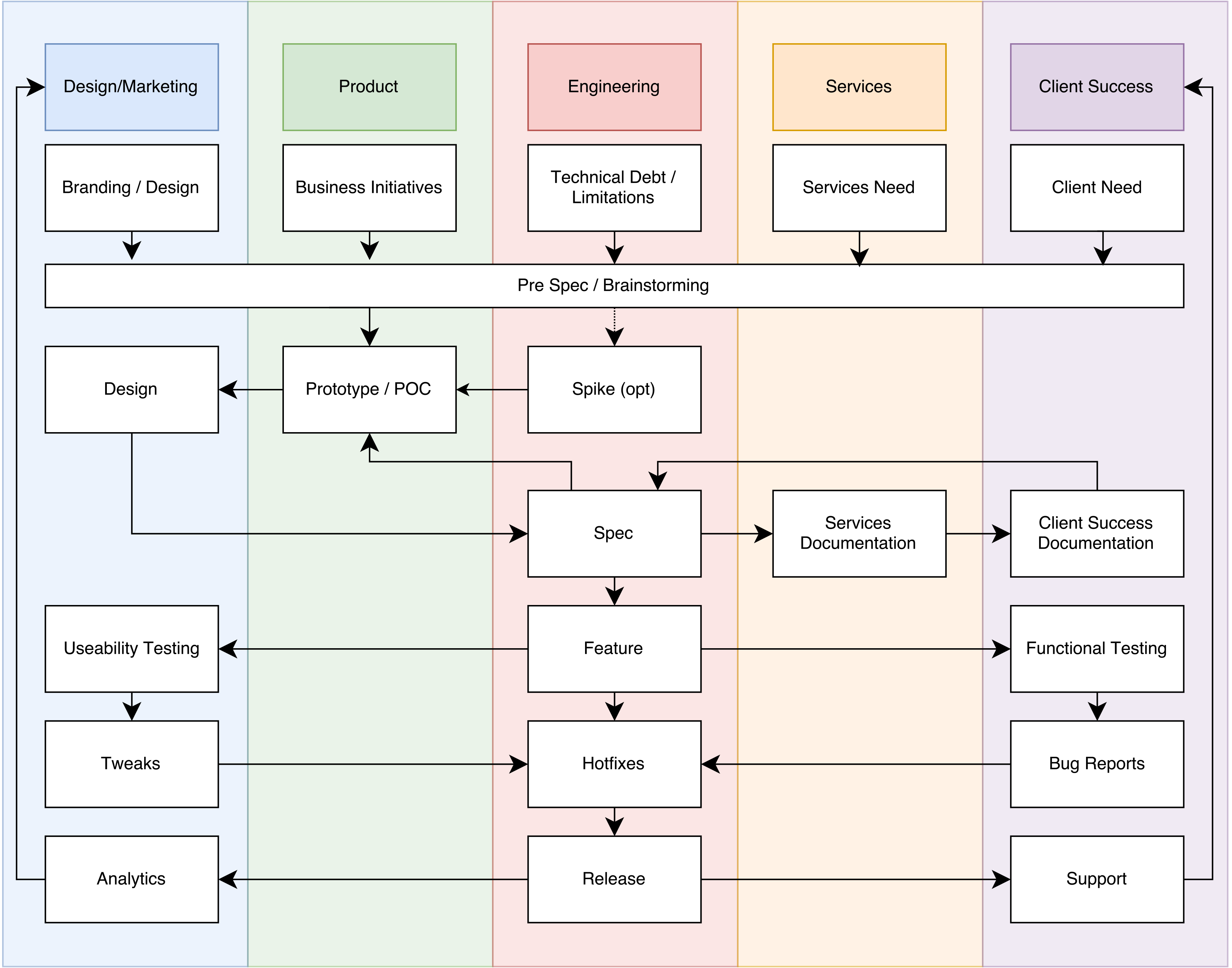

If you get a chance to read both parts of this blog post, you should; it's worth every minute. Suddenly I realized that I had encountered many of those system traps in my career. But it was hard to avoid many of those traps as an engineer speaking about product development. There were too many specific instances to keep in mind and too much that would need to change. Eventually I started to put together a picture of a product development system, informed by many of those systems engineering traps, that would provide a context for working with maximal collaboration between the different functions within a tech company. The process I came up with was as follows:

The diagram itself is actually fairly intuitive, and not too far from several other paradigms, including Agile itself. The key differentiator is that it is aimed at developing a concept based on the consensus it has within the organization. Too often escalation results in organizations skipping some of these steps in order to deliver something that they view as business critical. However, forcing requirements through such a system allows organizations to know and measure the velocity of their pipeline against the level of consensus they have achieved within the organization. Here is a description of the above process in plain words:

- Representatives from each team collaborate in brainstorming sessions to determine a list of “Pre-specs,” the seeds of features to be developed within the software. Included in this brainstorming are assessments by engineering of technical feasibility and of how particular features build upon one another in the context of their architecture and platform (a blog post on this to come later).

- If even engineering does not know whether a particular feature is technically feasible, engineering might need to perform a Spike.

- The next steps go in order of “difficulty or amount of time needed to modify.” First the idea moves on to Product to be developed into a prototype or proof of concept. This varies depending on the organization, but often equates to a high-level description of the feature and the user stories it maps to, as well as some basic wireframes and technical descriptions.

- Next the prototype is passed off to Design (whose output is more costly to change than the prototype). Design tools whose output can easily be shared with product and engineering for collaboration are helpful. For this reason I have recently been using Adobe XD, because of its presentation view, which product can use to visualize the user experience workflow and assess how well it fits the defined user stories.

- Next the design is passed off to engineering to be turned into a Spec. This is engineering's deep dive into the technical feasibility of the individual components laid out by product and design. If any issues are encountered, the design is passed back to product to be re-evaluated and then to design to be tweaked. This cycle may repeat several times before the Spec is finalized.

- Depending on the software product, there may be a heavy or light services component to using it. For product-oriented SaaS companies this step may be very light or nonexistent. For more service-oriented products it might be crucial: Services gets a chance to see what services engagements the proposed Specification will require, to assess how many personnel will be needed to support them, and, while assessing, to write the internal documentation for performing those services tasks.

- After this checks out they pass it on to Client Success, who sanity-check the match between the user needs that Client Success is escalating and the scope of the Specification. These may iterate a couple more times.

- The reason that Design/Product are separated from Services/Client Success is that the first group represents a “planning” phase of the product, whereas the latter represents an “execution” phase. This separation is important because it presents the execution teams with something to respond to and iterate upon.

- Once the Spec has been vetted by all of its stakeholders, the engineering team drives the feature to execution. Separate from automated testing (such as RSpec, Capybara, Selenium), manual usability and functional testing are performed by User Experience Design and Client Success, though many organizations choose to make functional testing, or at least smoke testing, a responsibility of the engineering team. It often makes more sense for Design and Client Success to perform those tests, since they will need to be familiar with the issues that may arise, though some organizations think it's better for the engineering team to have greater accountability for their work.

- After those tests are performed, follow-ups happen in the form of hotfixes.

- The most important part of the process is remembering that a feature is NOT finished once it ships. It needs to keep being monitored and assessed: analytics to determine the effectiveness of changes, A/B or multivariate testing, and support from both Client Success and Services.
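The steps above can be sketched as an ordered pipeline with a couple of feedback loops. This is only one possible reading of the process: the stage names, the `SEND_BACK` table, and the `next_stage` helper are hypothetical, the optional Spike is left out, and the loop from Client Success back to Services is an assumption (the text only says those steps "may iterate").

```python
from enum import Enum


class Stage(Enum):
    """Stages in the order described above (names are illustrative)."""
    PRE_SPEC = 1
    PROTOTYPE = 2               # owned by Product
    DESIGN = 3
    SPEC = 4                    # owned by Engineering
    SERVICES_REVIEW = 5
    CLIENT_SUCCESS_REVIEW = 6
    EXECUTION = 7
    TESTING = 8
    HOTFIXES = 9
    MONITORING = 10


# Where a feature goes when a stage raises an issue.
# SPEC -> PROTOTYPE follows the text (back to product, then design).
# CLIENT_SUCCESS_REVIEW -> SERVICES_REVIEW is an assumption.
SEND_BACK = {
    Stage.SPEC: Stage.PROTOTYPE,
    Stage.CLIENT_SUCCESS_REVIEW: Stage.SERVICES_REVIEW,
}


def next_stage(current, approved):
    """Return the stage a feature moves to after `current` is reviewed."""
    if not approved:
        # Stages without a defined loop simply keep the feature in place.
        return SEND_BACK.get(current, current)
    if current is Stage.MONITORING:
        # Monitoring never "finishes"; a shipped feature stays here.
        return current
    return Stage(current.value + 1)


# A Spec with open issues goes back to Product, then forward again.
stage = next_stage(Stage.SPEC, approved=False)
print(stage.name)
print(next_stage(stage, approved=True).name)
```

Making the loops explicit like this is one way to count how many times a feature bounced between stages, which is a rough proxy for the level of consensus mentioned earlier.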

In a later blog post I will talk about how this process can be executed rapidly by small project-oriented teams, which I call “cells” or “pods,” and how this increases collaboration and accountability, as well as camaraderie, within your organization.

Kyle Prifogle
